
Previous COMPUTE seminars

Jens Saak (Max Planck, Magdeburg)

Sustainable development of research software

Tuesday April 21, 14.15, online via Zoom

Developing computational approaches in microscopy

Carolina Wählby, Uppsala University

Friday 24 January 2020, 14.00 - 15.00

Lundmarksalen, astronomihuset

Modelling crowd evacuation

Enrico Ronchi, Division of Fire Safety Engineering, LTH.

Monday November 18 2019, 14.15-15.00

Lundmarksalen, astronomihuset

Detecting solar system objects with convolutional neural networks

Maggie Lieu (European Space Agency)

Monday May 6th 2019, 14.00

Lundmarksalen, astronomihuset

Joint COMPUTE/AIML seminar

With upcoming big astronomical surveys such as LSST and Euclid imaging enormous volumes of the sky at high speeds, the detection of asteroids, comets and other moving bodies with traditional methods will not be feasible. Machine learning methods such as convolutional neural networks can help us to sift through the large amounts of data and quickly identify objects of interest for follow-up observation and tracking. In this talk I will show how CNNs can be trained to effectively identify asteroids and other astronomical objects in an unbiased manner even when the training data sample is small.

Cognitive Discovery: How AI is Changing Technical R&D

Costas Bekas (IBM Research, Zurich)

Tuesday March 26 2019, 14.00

Lundmarksalen, astronomihuset

Joint COMPUTE/AIML event

Abstract: Cognitive Discovery (CD) is an AI-based holistic framework designed to massively accelerate technical R&D. It introduces novel AI technologies to ingest and represent complicated technical knowledge and know-how, sift through oceans of data and drive technical hypothesis discovery and verification by clever design of simulations. Real market deployments of CD have demonstrated at least an order of magnitude improvement in discovery rates of new technologies such as chemicals and materials, while in addition driving the creation and curation of corporate deep technical memory.

Speaker biography: Dr. Costas Bekas manages the Foundations of Cognitive Solutions group at IBM Research-Zurich. He received his B.Eng., MSc and PhD degrees, all from the Computer Engineering & Informatics Department, University of Patras, Greece, in 1998, 2001 and 2003 respectively. From 2003 to 2005 he worked as a postdoctoral associate with Prof. Yousef Saad at the Computer Science & Engineering Department, University of Minnesota, USA. He has been with IBM since September 2005. His main research interests span AI, massive-scale analytics, and energy-aware algorithms and architectures. He is a recipient of the PRACE 2012 award and the ACM Gordon Bell prizes of 2013 and 2015.

Monday March 18 2019, 15.15

Andrew Winters (Mathematical Institute, University of Cologne): Stability in High-Order Numerics

Abstract: Nature is non-linear. Many fundamental physical principles such as conservation of mass, momentum, and energy are mathematically modeled by non-linear time dependent partial differential equations (PDEs). Such non-linear conservation laws describe a broad range of applications in science and engineering, e.g., the prediction of noise and drag from aircraft, the build up and propagation of tsunamis in oceans, the behavior of gas clouds, and propagation of non-linear acoustic waves in different materials.

In practice, marginally resolved simulations of non-linear conservation laws, such as the compressible Navier-Stokes equations or the ideal magnetohydrodynamics (MHD) equations, reveal that high-order methods are prone to aliasing instabilities. This can lead to total failure and breakdown of the algorithms. Aliasing issues are introduced and intensified by a combination of insufficient discrete integration precision, collocation of non-linear terms, polynomial approximations applied to rational functions, and, again, by insufficient grid resolution. The aim of this talk is to discuss a remedy for such aliasing issues and present its connection to discrete variants of the product and chain rules. As such, we construct nodal high-order numerical methods for non-linear conservation laws that are entropy stable. To do so, we focus on particular approximate derivative operators used to mimic steps from the continuous well-posedness PDE analysis on the discrete level and enhance the robustness of the high-order numerics.
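As a hedged illustration of the link between aliasing and the discrete product rule mentioned above, consider the inviscid Burgers equation. This is a standard textbook example and not necessarily the construction presented in the talk. The symbols are assumptions introduced here: D is a summation-by-parts derivative matrix with diagonal positive norm matrix P, Q = PD satisfies Q + Q^T = B = diag(-1, 0, ..., 0, 1), U = diag(u), and the circle denotes the pointwise product.

```latex
% Continuously, the product rule (u^2)_x = 2 u u_x makes both forms identical:
\[
  u_t + \left(\tfrac{1}{2}u^2\right)_x
  = u_t + \tfrac{1}{3}\left(u^2\right)_x + \tfrac{1}{3}\,u\,u_x = 0 .
\]
% Discretely, D(u \circ u) \neq 2\,U D u in general, so the forms differ.
% The split-form semi-discretization is
\[
  \mathbf{u}_t + \tfrac{1}{3}\,D\,(\mathbf{u}\circ\mathbf{u})
               + \tfrac{1}{3}\,U D\,\mathbf{u} = 0 .
\]
% Multiplying by u^T P and using Q + Q^T = B gives
\[
  \tfrac{1}{2}\frac{d}{dt}\,\|\mathbf{u}\|_P^2
  = -\tfrac{1}{3}\left[\mathbf{u}^T Q\,(\mathbf{u}\circ\mathbf{u})
      + (\mathbf{u}\circ\mathbf{u})^T Q\,\mathbf{u}\right]
  = -\tfrac{1}{3}\,\mathbf{u}^T B\,(\mathbf{u}\circ\mathbf{u})
  = -\tfrac{1}{3}\left(u_N^3 - u_0^3\right).
\]
```

In this sketch the discrete energy rate depends only on boundary values, mirroring the continuous estimate, even though the product rule itself does not hold for D.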

6 December 2018

Benjamin Ragan-Kelley (Simula Research Laboratory):

Jupyter: facilitating interactive, open, and reproducible science

Friday 16 November 2018

Björn Nystedt (NBIS):

Presentation of NBIS — National Bioinformatics Infrastructure Sweden

21 September 2018

Steven Longmore (Liverpool John Moores University):

Using machine learning to identify animals from drones

Video can be found here.

Abstract:

The World Wide Fund for Nature (WWF) estimates that up to five species of life on our planet become extinct every day. This astonishing rate of decline has potentially catastrophic consequences, not just for the ecosystems where the species are lost, but also for the world economy and planet as a whole. Indeed, biodiversity loss and consequent ecosystem collapse are commonly listed as one of the 10 foremost dangers facing humanity, and most pressingly in the developing world. There is a fundamental need to routinely monitor animal populations over much of the globe so that conservation strategies can be optimized with such information. Meeting this need is a considerable challenge. To date most monitoring of animal populations is conducted manually, which is extremely labour-intensive, inherently slow and costly. Building on technological and software innovations in astronomy and machine learning, we have developed a drone plus thermal infrared imaging system and an associated automated detection/identification pipeline that has the potential to provide a cost-effective and efficient way to overcome this challenge. I will describe the current status of the system and our efforts to enable local communities in developing countries with little or no technical background to run routine monitoring and management of animal populations over large and inhospitable areas and thereby tackle global biodiversity loss.

8 May 2018

Alice Quillen (University of Rochester):

Astro-viscoelastodynamics or Soft Astronomy: Tidal encounters, tidal evolution and spin dynamics

Abstract:

Mass spring models, originally developed for graphics and gaming applications, can measure remarkably small deformations while conserving angular momentum. By combining a mass spring model with an N-body simulation, we simulate tidal spin down of a viscoelastic moon, directly tying simulated rheology to orbital drift and internal heat generation. I describe a series of applications of mass spring models in planetary science. Close tidal encounters among large planetesimals and moons were more common than impacts. Tidal encounters can induce sufficient stress on the surface to cause large-scale brittle failure of an icy crust. Strong tidal encounters may be responsible for the formation of long chasmata in ancient terrain of icy moons such as Dione and Charon. The New Horizons mission discovered that Pluto and Charon's minor satellites Styx, Nix, Kerberos, and Hydra are rapidly spinning, but surprisingly their spin axes are tilted into the orbital plane (they have high obliquities). Simulations of the minor satellites in a drifting Pluto-Charon binary system exhibit rich resonant spin dynamics, including spin-orbit resonance capture, tumbling resonance and spin-binary resonances. We have found a type of spin-precession mean-motion resonance with Charon that can lift obliquities of the minor satellites in the Pluto/Charon system.

12 April 2018

Paul-Christian Bürkner (University of Münster):

Why not to be afraid of priors (too much)

Talk given at Bayes@Lund 2018. Click here for videos and slides from the event.

26 March 2018

Martin Turbet (Laboratoire de Météorologie Dynamique, Paris):

Exploring the diversity of planetary atmospheres with Global Climate Models

Abstract:

More than 50 years ago, scientists created the first Global Climate Models (GCMs) to study the atmosphere of the Earth. Since then, the complexity and the level of realism of these models (that can now include the effect of oceans, clouds, aerosols, chemistry, vegetation, etc.) have considerably increased. The large success of these models has recently motivated the development of an entire family of GCMs designed to study extra-terrestrial environments in our solar system (Venus, Mars, Titan, Pluto) and even beyond (extrasolar planets).

I will first show various GCM applications on Venus, Mars, Titan and Pluto. The successes, and sometimes failures, of solar system GCMs teach us useful lessons for investigating and possibly predicting the climates of planets for which no (or almost no) observations are available. I will then present several examples of studies recently performed using a 'generic' Global Climate Model developed at the Laboratoire de Météorologie Dynamique in Paris, designed to explore the possible atmospheres and the habitability of ancient planets or extrasolar planets.

1 December 2017

Bojana Rosić (TU Braunschweig): Uncertainty quantification in a Bayesian setting

Abstract:

Concrete and human bone tissue are typical examples of materials which exhibit randomness in the mechanical response due to an uncertain heterogeneous micro-structure. In order to develop an appropriate probabilistic macro-scale mathematical description, the essential step is to address the material as well as possible other sources of uncertainties (e.g. excitations, change in geometry, etc.) in the model. By extending already existing deterministic models derived from Helmholtz free energy and the dissipation functions characterising ductile or quasi-brittle behaviour, the goal of this talk is to identify and quantify uncertainty in the system response. For this purpose a Bayesian probabilistic setting is considered in which the modeller's a priori knowledge about the model parameters and the available set of data obtained by experiments are taken into account when identifying the corresponding probability distribution functions of unknown parameters. Identification in the form of Bayesian inverse problems, in particular when experiments are performed repeatedly, requires an efficient solution and representation of possibly high-dimensional probabilistic forward problems, i.e. the estimation of the measurement prediction given the prior assumptions. An emergent idea is to propagate parameter uncertainties through the model in a Galerkin manner in which the solution of the corresponding differential equations is represented by a set of stochastic basis polynomials, the cardinality of which grows exponentially. To allow an efficient solution of high-dimensional problems this talk will present the new low-rank Galerkin schemes combined with Bayesian machine learning approaches.

30 May 2017

Michel Defrise (Vrije Universiteit, Brussel): Statistical reconstruction methods in medical imaging

Abstract:

Statistical reconstruction methods are probably the most common methods today for obtaining tomographic images from SPECT/PET measurements. The methods are based on modelling the camera system and iteratively finding a good estimate of the internal radionuclide distribution from the similarity of the calculated and measured projection data. The main advantage is that if the physical problems associated with the measurement of the radiation (such as non-homogeneous photon attenuation, the contribution from scattered photons, partial volume problems due to collimator resolution, and septal penetration) can be modelled accurately, then compensation for these problems follows naturally. The lecture will cover the fundamental mathematical parts of the procedures described above.

14 November 2016

INTEGRATE meeting -- see the INTEGRATE website for more details.

26 October 2016

INTEGRATE math-hack day -- see the INTEGRATE website for more details.

5 October 2016

INTEGRATE hack day -- see the INTEGRATE website for more details.

26 September 2016

INTEGRATE meeting -- see the INTEGRATE website for more details.

11 May 2016

INTEGRATE meeting -- see the INTEGRATE website for more details.

25 April 2016

INTEGRATE math-hack day -- see the INTEGRATE website for more details.

12 April 2016

Patrick Farrell (Oxford University): Automated adjoint simulations with FEniCS and dolfin-adjoint

Abstract:

The derivatives of PDE models are key ingredients in many important algorithms of computational science. They find applications in diverse areas such as sensitivity analysis, PDE-constrained optimisation, continuation and bifurcation analysis, error estimation, and generalised stability theory.

These derivatives, computed using the so-called tangent linear and adjoint models, have made an enormous impact in certain scientific fields (such as aeronautics, meteorology, and oceanography). However, their use in other areas has been hampered by the great practical difficulty of the derivation and implementation of tangent linear and adjoint models. Naumann (2011) describes the problem of the robust automated derivation of parallel tangent linear and adjoint models as "one of the great open problems in the field of high-performance scientific computing".

In this talk, we present an elegant solution to this problem for the (common) case where the original discrete forward model may be written in variational form, and discuss some of its applications.

11 April 2016

INTEGRATE hack day -- see the INTEGRATE website for more details.

14 March

INTEGRATE meeting -- see the INTEGRATE website for more details.

12 October 2015

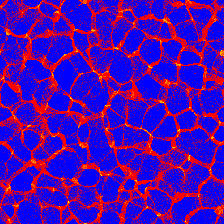

Volker Springel (Heidelberg Institute for Theoretical Studies): Cosmic structure formation on a moving mesh

Abstract:

Recent years have seen impressive progress towards hydrodynamic cosmological simulations of galaxy formation that try to account for much of the relevant physics in a realistic fashion. At the same time, numerical uncertainties and scaling limitations in the available simulation codes have been recognized as important challenges. I will review the state of the field in this area, highlighting a number of recent results obtained with large particle-based and mesh-based simulations. In particular, I will describe a novel moving-mesh methodology for gas dynamics in which a fully dynamic and adaptive Voronoi tessellation is used to formulate a finite volume discretization of hydrodynamics which offers numerous advantages compared with traditional techniques. The new approach is fully Galilei-invariant and gives much smaller advection errors than ordinary Eulerian codes, while at the same time offering comparable accuracy for treating shocks and an improved treatment of contact discontinuities. The scheme adjusts its spatial resolution to the local clustering of the flow automatically and continuously, and hence retains a principal advantage of SPH for simulations of cosmological structure growth. Applications of the method in large production calculations that aim to produce disc galaxies similar to the Milky Way will be discussed.

16 April 2015

Derek Richardson (University of Maryland): Asteroids: Modeling the future of space exploration

Abstract:

Over the past 2 decades since the first spacecraft images were returned from an asteroid, we have learned that these potentially hazardous objects not only often support satellites but are likely themselves loose collections of fragmented material. Numerical simulations assist in understanding the evolution of these leftover building blocks of the terrestrial planets, planning for the mitigation of their threat to Earth, and for advising space mission designers on the challenges of sample return and possible astronaut landing. Our group uses an adapted high-performance parallelized gravity tree code (PKDGRAV) to simulate gravitational and collisional processes among small bodies in space. Basic features of the code will be presented, along with their application to selected topics in small-body evolution. The talk will feature our latest simulations of granular flow in microgravity, a key tool for modeling sample-return mechanisms.

23 March 2015

Thomas Neuhaus (Jülich Supercomputing Centre): Quantum computing via quantum annealing from a physics point of view

Abstract:

I present an overview of the theory of quantum annealing as a means of solving so-called intractable mathematical problems. I will introduce satisfiability problems consisting of a number of simple constraints (clauses) on a set of Boolean variables (e.g., 2SAT and 3SAT) and report on today's knowledge of the usefulness of quantum annealing in such theories. I will also give an introduction to the existing D-Wave quantum computer and present a few computer experiments on the machine. As of today, theoretical physics has not yet identified a class of mathematical problems, nor physical theories, that benefits from quantum annealing with certainty. A search for such problems is under way.
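To make the notion of clauses on Boolean variables concrete, here is a minimal Python sketch, not taken from the talk. It encodes a small 3SAT instance and counts violated clauses, the kind of cost function whose minimum an annealer would search for. The literal encoding, the function names and the toy instance are all assumptions made for illustration, and the exhaustive search stands in for the annealer.

```python
from itertools import product

# A literal is a non-zero int: k means "variable k is True", -k means "variable k is False".
# A clause is a tuple of literals; a 3SAT instance is a list of 3-literal clauses.

def violated_clauses(assignment, clauses):
    """Count clauses with no true literal (the 'energy' of the assignment)."""
    return sum(
        not any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )

def brute_force_ground_state(num_vars, clauses):
    """Try all 2**num_vars assignments; return one with the fewest violated clauses."""
    best, best_energy = None, None
    for values in product([False, True], repeat=num_vars):
        assignment = {i + 1: v for i, v in enumerate(values)}
        energy = violated_clauses(assignment, clauses)
        if best_energy is None or energy < best_energy:
            best, best_energy = assignment, energy
    return best, best_energy

# Hypothetical toy instance:
# (x1 or x2 or not x3) and (not x1 or x2 or x3) and (x1 or not x2 or not x3)
toy_clauses = [(1, 2, -3), (-1, 2, 3), (1, -2, -3)]
ground_state, ground_energy = brute_force_ground_state(3, toy_clauses)
print(ground_state, ground_energy)
```

An energy of zero means every clause is satisfied. The exhaustive loop grows as 2**num_vars, which is why such problems are called intractable at scale.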

16 February 2015

Gregor Gassner (University of Cologne): A massively parallel model for fluid dynamics simulations

Abstract:

In this talk, I will consider the numerical simulation of non-linear advection-diffusion problems, particularly of the compressible Navier-Stokes equations used to model turbulent fluid flows in engineering applications and problems appearing in natural sciences.

For this, we develop stable and accurate methods for conservation laws and incorporate other important physical aspects, such as entropy stability. Examples include the Burgers equation, the shallow water equations, Maxwell's equations and the Euler equations.

A special emphasis is on the computational implementation of these mathematical models such that they are well suited for realistic applications. Due to the multi-scale character of the considered problems, a large amount of spatial and temporal resolution is needed for an accurate approximation. The number of spatial degrees of freedom is as high as one billion, with over one hundred thousand time steps. Such simulations are feasible only when the power of today's largest supercomputers is unleashed.

I will present a special parallelization strategy which is tailored directly to the mathematical model in use and allows simulations on over one hundred thousand cores with near perfect parallel efficiency. Scaling results on different machines are demonstrated, where the absolute limit of MPI is tested with only one grid cell left on a processor.

At the end of the talk, some applications simulated with this framework are illustrated.

15 December 2014

Anna-Karin Tornberg (KTH): Accelerated boundary integral simulations of particulate and two-phase flows

Abstract:

In micro-fluidic applications where the scales are small and viscous effects dominant, the Stokes equations are often applicable. The suspension dynamics of fluids with immersed rigid particles and fibers are very complex also in this Stokesian regime, and surface tension effects are strongly pronounced at interfaces of immiscible fluids - such as surfaces of water drops in oil.

Simulation methods can be developed based on boundary integral equations, which leads to discretizations of the boundaries of the domain only, and hence fewer unknowns compared to a discretization of the PDE. This involves evaluating integrals containing the fundamental solution (Green's function) for the PDE. This will result in both singular and nearly singular integrals that need to be evaluated, and the construction of accurate quadrature methods is a main challenge. The Green's functions decay slowly, which results in dense or full system matrices. To reduce the cost of the solution of the linear system, an acceleration method must be used. If these two issues - accurate quadrature methods and acceleration of the solution of the linear system - are properly addressed, boundary integral based simulations can be both highly accurate and very efficient.

I will give an introduction to boundary integral methods - discussing the concepts starting with the simplest formulation for rigid particles, before discussing the more well-conditioned formulations that we actually use. I will give the main ideas for a spectrally accurate FFT based Ewald method developed for the purpose of accelerating simulations, and for quadrature treatment in two and three dimensions. I will show results for periodic suspensions of rigid particles in 3D and of interacting drops in 2D.

17 November 2014

Debora Sijacki (University of Cambridge): Simulating galaxy formation: numerical and physical uncertainties

Abstract:

Hydrodynamical cosmological simulations are one of the most powerful tools to study the formation and evolution of galaxies in the fully non-linear regime. Despite several recent successes in simulating Milky Way look-alikes, self-consistent, ab-initio models are still a long way off. In this talk I will review numerical and physical uncertainties plaguing current state-of-the-art cosmological simulations of galaxy formation. I will then present global properties of galaxies as obtained with novel cosmological simulations with the moving mesh code Arepo and discuss which physical mechanisms are needed to reproduce realistic galaxy morphologies in the present day Universe.

20 October 2014

Lund University Humanities Lab — Why the humanities needs a lab and what we do in it

Why on earth do the Humanities need a lab?

Tea/coffee

Annika Andersson:

What the recording of brainwaves can tell us about language processing

Marcus Nyström:

What are eye movements? Looking into the elastic eye

Victoria Johansson:

Are we reading during writing? What does the combination of keystroke logging and eyetracking reveal?

Nicolo dell'Unto:

The use of 3D models for intra-site investigation in Archaeology

Abstract:

Lund University Humanities Lab is an interdisciplinary research and training facility whose aim is to enable scholars (mainly) in the humanities to combine traditional and novel methods, and to interact with other disciplines in order to meet the scientific challenges ahead. The Lab hosts technology, methodological know-how, and archiving expertise, and a wide range of research projects. Activities are centered around issues of communication, culture, cognition and learning, but many projects are interdisciplinary and conducted in collaboration with the social sciences, medicine, the natural sciences, engineering, and e-Science locally (Lund University), nationally, and internationally. We start with a brief overview of the lab and its facilities, followed by four short talks exemplifying the kind of research that takes place in the Lab.

27 May 2014

Freddy Ståhlberg (Dept. of Medical Radiation Physics): What is LBIC?

Abstract:

The overall goal of Lund University Bioimaging Center (LBIC) is to pursue in-depth knowledge of human metabolism and function by developing and combining advanced imaging techniques (primarily high-field MRI, PET and SPECT). The goal is accomplished by a step-by-step establishment of the above-mentioned bio-imaging center at our University, using already well-established research groups in the field as the core structure.

To achieve this goal, we provide technical platforms, knowledge and support in the development of new diagnostic methods from experimental models, while at the same time taking unmet clinical problems to devise patient-specific tailored therapies. Technically, MRI and PET will be advanced both as individual modalities and also towards a merged use. We envision future simultaneous extraction of physiological and molecular information in a multimodal imaging environment for better understanding the impact of molecular events on cellular/tissue behavior. We believe that the development of the Lund University Bioimaging Center will have a significant impact on the research at Lund University. Locally, the center is a powerful resource for translational research at the medical faculty and at Skåne University Hospital, with strong connections to planned larger research facilities in Lund, e.g. MAX IV and ESS Scandinavia. On a national and international level, the buildup of the proposed center can be foreseen to become an asset to the whole biomedical research community.

5 May 2014

Martin Rosvall (Umeå University): Memory in network flows and its effects on community detection, ranking, and spreading

Abstract:

It is a paradigm to capture the spread of information and disease with random flow on networks. This conventional approach ignores an important feature of the dynamics: where flow moves to depends on where it comes from. That is, memory matters. We analyze multi-step pathways from different systems and show that ignoring memory has profound consequences for community detection. Compared to analysis without memory, community detection with memory generally reveals system organizations with more and smaller modules that overlap to a greater extent. For example, we show that including memory reveals actual travel patterns in air traffic and multidisciplinary journals in scientific communication. These results suggest that we can use only more data and not more elaborate algorithms to identify real modules in integrated systems.
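The statement that "where flow moves to depends on where it comes from" can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the speaker's method: the pathways below are invented return trips through a hub H, and the two helper functions simply count transitions with and without one step of memory.

```python
from collections import Counter, defaultdict

def first_order(paths):
    """Transition counts conditioned on the current node only (no memory)."""
    counts = defaultdict(Counter)
    for path in paths:
        for cur, nxt in zip(path, path[1:]):
            counts[cur][nxt] += 1
    return counts

def second_order(paths):
    """Transition counts conditioned on (previous node, current node)."""
    counts = defaultdict(Counter)
    for path in paths:
        for prev, cur, nxt in zip(path, path[1:], path[2:]):
            counts[(prev, cur)][nxt] += 1
    return counts

def normalize(counter):
    """Turn raw counts into transition probabilities."""
    total = sum(counter.values())
    return {node: count / total for node, count in counter.items()}

# Hypothetical pathways: travellers leave A or C, pass through hub H, and return home.
paths = [("A", "H", "A"), ("A", "H", "A"), ("C", "H", "C"), ("C", "H", "C")]

no_memory = normalize(first_order(paths)["H"])
with_memory = normalize(second_order(paths)[("A", "H")])
print(no_memory, with_memory)
```

Without memory, flow leaving H splits evenly between A and C. With one step of memory, flow that arrived from A always returns to A, which is the kind of structure a memoryless random walk would blur.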

23 April 2014

Paul Segars (Duke University): The XCAT phantom based on NURBS to create realistic anatomical computer models for use in Medical Imaging simulations

Talks on research involving voxel-based phantoms at Dept of Medical Radiation Physics, Lund University:

1) Michael Ljungberg: Nuclear medicine and the use of voxel-phantoms in Monte Carlo research

2) Gustav Brolin: A national quality assurance study of dynamic 99Tcm-MAG3 renography using Monte Carlo simulations and the XCAT phantom

3) Katarina Sjögreen Gleisner: An introduction to image-based dosimetry

4) Johan Gustafsson: A procedure for evaluation of accuracy in Image-Based dosimetrical calculations using the XCAT Phantom

9 December 2013

Thomas Curt (Irstea): Modelling fire behaviour and simulating fire-landscape relationships: Possibilities and Challenges

Abstract:

Fire is one of the major disturbances on the global scale, shaping landscapes and vegetation, and affecting the global carbon cycle (Gill et al., 2013). Global changes (land use changes and climate change) are predicted to modify fire regimes and fire distribution in many parts of the world (Krawchuk et al., 2009). In this context assessing fire risk and simulating fire-landscape interactions become crucial to sustainable land management.

In the recent period, important progress has been made to model fire behavior and to simulate fires on various spatial scales. A variety of models and simulators now exist, which have specific abilities and limitations (see http://www.fire.org/). We propose to review some of these models, and to sum up their purpose, potential, and challenges. Fire behavior models (e.g. BehavePlus, Firetec) allow simulating fire behavior for almost all ecosystems using quite simple field data. They also make it possible to assess fire effects on the ecosystems, notably postfire tree mortality. Some of these models are fully physics-based (2D or 3D), while others use empirical equations. They have been used or adapted to a large array of ecosystems and climate conditions worldwide, and examples will be presented. Landscape-fire models (e.g. Farsite, FlamMap) are spatially explicit and designed to simulate fires with different weather scenarios in sufficiently realistic landscapes (Cary et al., 2006). Thus they consider the combined effects of weather, fuels, and topography. Recently, high performance fire simulation systems have been developed to permit the simulation of hundreds of thousands of fires. They provide large-scale maps of burn probability or flame length, making it possible to assess which ecosystems and areas are at risk and should be monitored and managed preferentially. Some applications based on this type of model will be presented. Coupling fire behavior models with vegetation in Dynamic Global Vegetation Models (e.g. LPJ, Sierra) allows studying the role of fire disturbance for global vegetation dynamics. Their application at regional scale requires an approach which is explicit enough to simulate geographical patterns, but general enough to be applicable to each vegetation type at large scales. We shall present how the Sierra model can be used at local scale, in order to predict vegetation shifts according to different scenarios of future fire regime.

27 May 2013

Kevin Heng (University of Bern): Exoplanetary Atmospheres and Climates: Theory and Simulation

Abstract:

Exoplanet discovery is now an established enterprise. The next frontier is the in-depth characterization of the atmospheres and interiors of exoplanets, in preparation for next-generation observatories such as CHEOPS, TESS and JWST. In this presentation, I will review the astronomical, astrophysical and computational aspects of this nascent field of exoplanet science. I will begin by reviewing the observations of transit (absorption) and eclipse (emission) spectra and phase curves, emphasizing the importance of understanding clouds/hazes. Next, I will review the astrophysical aspects of understanding exoplanetary atmospheres, including the need to elucidate the complex interplay between dynamics, radiation and magnetic fields. Finally, I will discuss the Exoclimes Simulation Platform (ESP), an open-source set of simulational tools currently being constructed by my Exoplanets and Exoclimes Group at the University of Bern, reviewing the technical challenges faced by the ESP team. Advancing the theoretical state of the art requires a hierarchical (1D models versus 3D simulations) and multi-disciplinary approach, drawing from astronomy, astrophysics, geophysics, atmospheric and climate science, applied mathematics and planetary science.

7 May 2013

Susanna C. Manrubia (Centro de Astrobiología, Madrid): Tinkering in an RNA world and the origins of life

Abstract:

Life arose on Earth some 3.8 billion years ago. Despite tremendous experimental and theoretical efforts in the last 60 years to uncover how the first protocells could have originated from abiotic matter, we are still facing many more unknowns than answers. Two main players in the process are self-replicating molecules and metabolic cycles, whose emergence requires the presence of chemical species with the ability to code genetic information and catalyze chemical reactions. In current living organisms, DNA performs the former function and proteins the latter. But there is one molecule, RNA, able to perform both functions. This fact has led to the hypothesis that an ancient RNA world could have preceded modern cellular organization. That attractive scenario opens the possibility of carrying out computational studies on issues such as how RNA molecules could have been selected for simple chemical functions, what is the probability that such a molecule would arise in prebiotic environments in the absence of faithful template replication, or how populations of molecules explore the space of sequences and disclose evolutionary innovations.

10 December 2012

Bernhard Mehlig (Göteborg University): Turbulent aerosols: clustering, caustics, and collisions

Abstract:

Turbulent aerosols (particles suspended in a turbulent fluid or a random flow) are fundamental to understanding chemical and kinetic processes in many areas in the Natural Sciences, and in technology. Examples are the problem of rain initiation in turbulent clouds, and the problem of describing the collisions and aggregation of dust grains in circumstellar accretion disks.

In this talk I summarise recent progress in our understanding of the dynamics of turbulent aerosols. I discuss how the suspended particles may cluster together, and describe their collision dynamics in terms of singularities in the particle motion (so-called caustics).

25 October 2012

Chris Lintott (Oxford University): What to do with 600,000 scientists

Abstract:

'Citizen science' - the involvement of hundreds of thousands of internet-surfing volunteers in the scientific process - is a radical solution to the problem of Big Data. The largest and most successful such project, Galaxy Zoo, has seen volunteers provide more than 200 million classifications of more than 1 million galaxies, going beyond simple classification tasks to make and even follow up serendipitous discoveries of their own. As PI of Zooniverse.org, astronomer Chris Lintott leads a team that has enabled volunteers to sort through a million galaxies, discover planets of their own, determine whether whales have accents and even transcribe ancient papyri. In this talk, he will review some of the highlights of their work, explore the technology needed to keep such a large group of volunteers busy and look forward to a future when man and machine can work in harmony once more...

7 June 2012

Cristian Micheletti (International School for Advanced Studies, Trieste): Coarse-grained simulations of DNA in confined geometries.

Abstract:

Biopolymers in vivo are typically subject to spatial restraints, either as a result of molecular crowding in the cellular medium or of direct spatial confinement. DNA in living organisms provides a prototypical example of a confined biopolymer. Confinement prompts a number of biophysics questions. For instance, how can the high level of packing be compatible with the necessity to access and process the genomic material? What mechanisms can be adopted in vivo to avoid the excessive geometrical and topological entanglement of dense phases of biopolymers? These and other fundamental questions have been addressed in recent years by both experimental and theoretical means. Based on the recent reviews of refs. [1,2] we shall give an overview of these studies and report on general simulation techniques that can be effectively used to characterize the equilibrium properties of coarse-grained models of confined biomolecules.

References:

[1] D. Marenduzzo et al. J. Phys.: Condens. Matter 22 (2010) 283102

http://iopscience.iop.org/0953-8984/22/28/283102/

[2] C. Micheletti et al. Physics Reports, 504 (2011) 1-73

http://www.sciencedirect.com/science/article/pii/S0370157311000640

Download a PDF of the slides here.


This page was last modified on 18 May 2020.